Why are modern AI models getting faster despite growing in size? David Lott explains "Mixture of Experts" (MoE) and why this architecture is a game-changer for business IT efficiency.

David Lott on Nov 25, 2025

Mixture of Experts (MoE): The Secret Behind Efficient and Scalable AI

The current landscape of Artificial Intelligence presents a fascinating paradox. If you follow the headlines, you hear a constant drumbeat about the insatiable hunger for hardware: better models require massive data centers, ever-increasing computing power, and an incredible amount of energy.

But if you look closer at the models actually released this year, like the latest ones from Mistral or DeepSeek, you see something different. These models achieve significantly better results while activating only a fraction of their parameters for each token they generate.

How does that add up? How can we scale intelligence without bankrupting our energy budget?

The answer lies in a smart architectural concept that is currently redefining how we build Large Language Models (LLMs): Mixture of Experts (MoE).

Short on time? Here, I explain Mixture of Experts in brief:

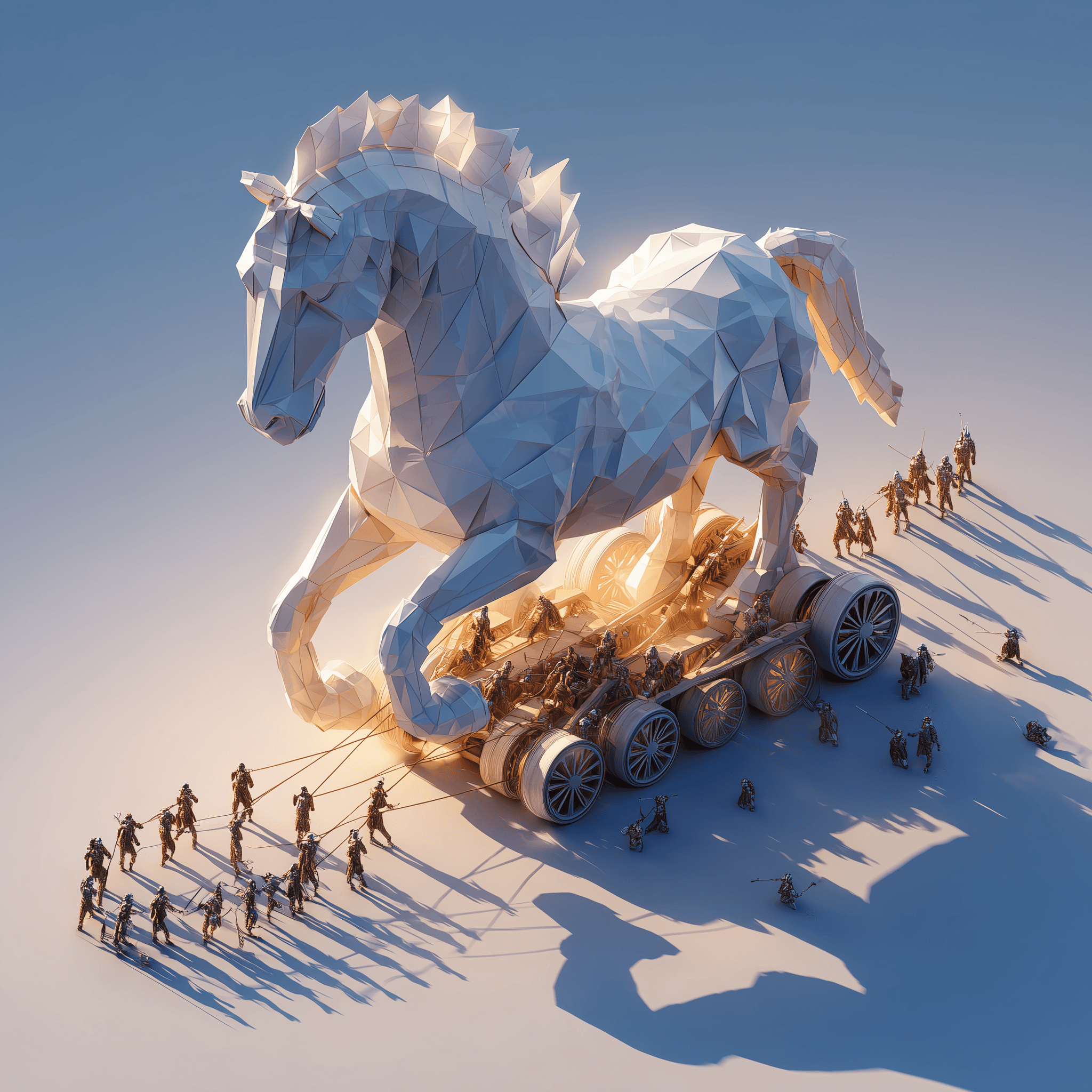

The End of the "Know-It-All" Monolith

To understand why MoE is such a breakthrough, we first have to look at how we used to build AI. Until recently, most leading LLMs were so-called "dense models." Think of a dense model as a single, gigantic brain. Every time you asked it a question—whether it was "What is the capital of France?" or "Write a Python script for encryption"—the entire brain had to light up.

Every single parameter was activated for every single token generated.

This is inefficient. It’s like hiring a team of 100 people and forcing every single one of them to attend every meeting, regardless of the topic. It wastes energy and slows down the process.
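To make the "entire brain lights up" point concrete, here is a minimal Python sketch of a single dense feed-forward layer, the kind of layer that MoE models later replace with a set of experts. The sizes (`D_MODEL`, `D_HIDDEN`) and helper names are illustrative assumptions, and real models use optimized tensor libraries rather than plain lists. The point is simply that every weight takes part in the computation for every token.

```python
import random

# Toy dense feed-forward layer: D_MODEL -> D_HIDDEN -> D_MODEL.
# Sizes are illustrative assumptions, far smaller than any real LLM.
D_MODEL, D_HIDDEN = 8, 32

random.seed(0)
W_in = [[random.gauss(0, 0.1) for _ in range(D_HIDDEN)] for _ in range(D_MODEL)]
W_out = [[random.gauss(0, 0.1) for _ in range(D_MODEL)] for _ in range(D_HIDDEN)]

def matvec(x, W):
    """Row vector x (len rows) times matrix W (rows x cols) -> vector of len cols."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

def dense_ffn(x):
    """Every weight in W_in and W_out participates for every token."""
    h = [max(0.0, v) for v in matvec(x, W_in)]  # ReLU activation
    return matvec(h, W_out)

token = [random.gauss(0, 1) for _ in range(D_MODEL)]
out = dense_ffn(token)

params_total = D_MODEL * D_HIDDEN + D_HIDDEN * D_MODEL
params_used_per_token = params_total  # dense: nothing is skipped
print(len(out), params_total, params_used_per_token)  # 8 512 512
```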

Enter the Mixture of Experts (MoE)

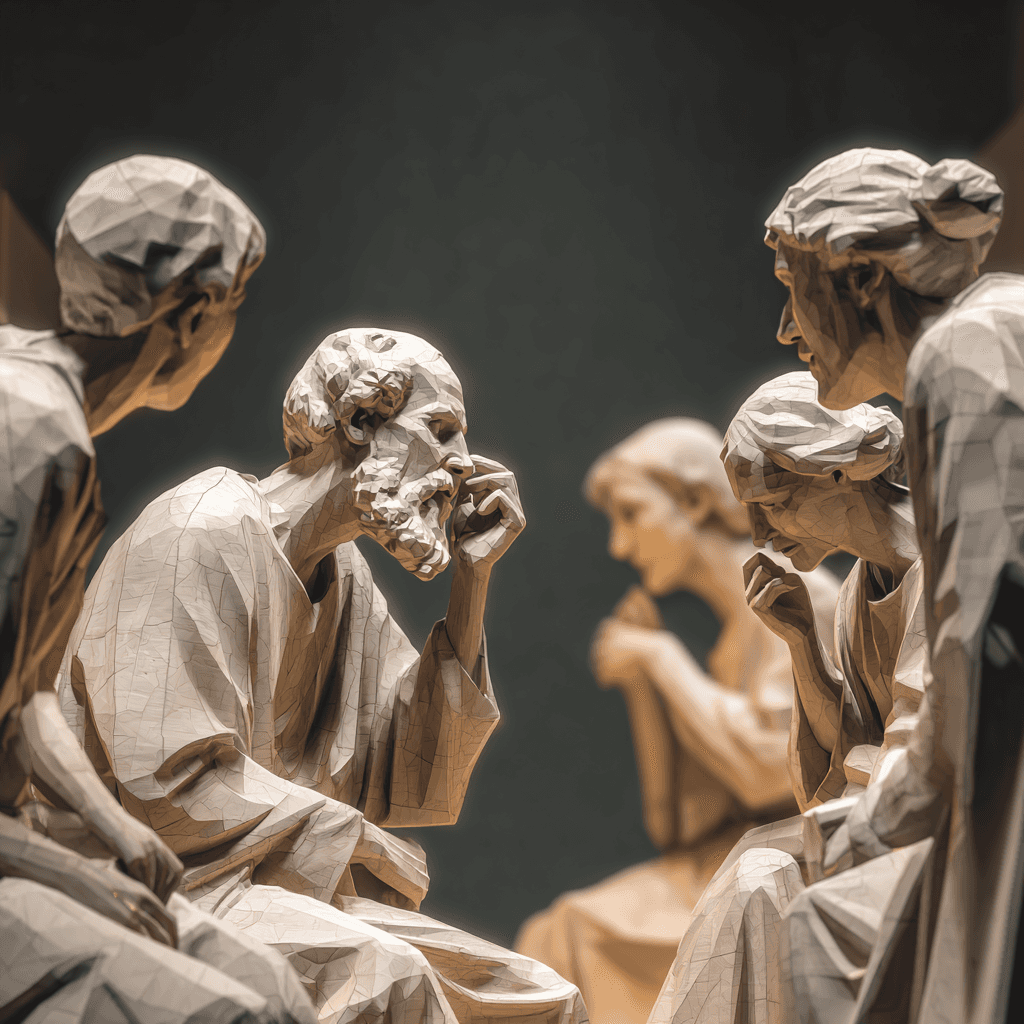

Mixture of Experts flips this logic on its head. Instead of building one colossal "generalist" that fires on all cylinders constantly, MoE constructs a team of highly specialized sub-models.

Imagine a virtual room full of experts:

One is a genius at mathematics.

One specializes in historical context.

One is an expert in coding and syntax.

Another might just be really good at detecting sarcasm.

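This is the core architecture of MoE. The model isn't a monolith; it’s a collection of these specialized "experts." In practice, the experts are typically feed-forward sub-networks inside each Transformer layer, and the specializations they learn during training tend to be far less tidy than this analogy suggests.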

The "Manager": The Gating Network

How does the model know which expert to ask? This is where the Gating Network comes in. Think of it as a smart project manager or a triage nurse.

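When you send a prompt to an MoE model, the Gating Network (often called the router) scores every token in real time, at each MoE layer of the model. It might determine, for example, that a given token doesn't need the history buff or the sarcasm detector, and route it only to the experts best suited to it: usually a small top-k selection. Mixtral 8x7B, for instance, sends each token to 2 of its 8 experts per layer, while DeepSeek-V3 activates 8 of its 256 routed experts (plus one shared expert).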
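The routing step can be sketched in a few lines of Python. This is a minimal illustration assuming a Mixtral-style router: a learned linear layer (here a hypothetical `W_gate` with hand-picked values) produces one score per expert, the top-k scores are kept, and a softmax over just those scores yields the mixing weights. Other models normalize differently (for example, with sigmoid gating), and the numbers below are made up for demonstration.

```python
import math

def router_logits(x, W_gate):
    """Learned linear layer: one score per expert for this token's hidden state x."""
    return [sum(x[i] * W_gate[i][e] for i in range(len(x))) for e in range(len(W_gate[0]))]

def top_k_route(logits, k=2):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    The weights are a softmax over the selected logits only, so they sum to 1.
    """
    top = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:k]
    m = max(logits[e] for e in top)  # subtract the max for numerical stability
    exps = [math.exp(logits[e] - m) for e in top]
    total = sum(exps)
    return [(e, v / total) for e, v in zip(top, exps)]

# Hypothetical token with 4 features and a router for 8 experts.
x = [1.0, 0.0, 0.0, 0.0]
W_gate = [
    [0.1, 2.0, -1.0, 1.5, 0.0, -0.5, 0.3, 0.2],  # only row 0 matters for this x
    [0.0] * 8,
    [0.0] * 8,
    [0.0] * 8,
]
routes = top_k_route(router_logits(x, W_gate), k=2)
print(routes)  # experts 1 and 3 are selected; the other six stay idle
```

Keeping only the top-k scores before the softmax is what makes the computation sparse: experts that are not selected never run at all for this token.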

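These selected experts process the token, their outputs are combined as a weighted sum (using the gate's scores as weights), and the result flows on through the model until you get your answer.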
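Putting the router and the experts together gives a toy MoE layer. This sketch assumes tiny, randomly initialized experts (each a two-layer ReLU feed-forward network) and Mixtral-style top-2 routing; all names and sizes are hypothetical. The `calls` counter shows that only the selected experts do any work for a given token, and the layer's output is the gate-weighted sum of their outputs.

```python
import math
import random

random.seed(42)
D_MODEL, D_HIDDEN, N_EXPERTS, TOP_K = 8, 16, 8, 2  # toy sizes (assumptions)

def rand_matrix(rows, cols):
    """Random weights standing in for trained parameters."""
    return [[random.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

def matvec(x, W):
    """Row vector x (len rows) times matrix W (rows x cols) -> vector of len cols."""
    return [sum(x[i] * W[i][j] for i in range(len(x))) for j in range(len(W[0]))]

# Each expert is its own small feed-forward network with separate weights.
experts = [(rand_matrix(D_MODEL, D_HIDDEN), rand_matrix(D_HIDDEN, D_MODEL))
           for _ in range(N_EXPERTS)]
W_gate = rand_matrix(D_MODEL, N_EXPERTS)  # the gating network (router)
calls = [0] * N_EXPERTS                    # how often each expert actually ran

def expert_ffn(e, x):
    calls[e] += 1
    W_in, W_out = experts[e]
    h = [max(0.0, v) for v in matvec(x, W_in)]  # ReLU
    return matvec(h, W_out)

def moe_layer(x):
    logits = matvec(x, W_gate)
    top = sorted(range(N_EXPERTS), key=lambda e: logits[e], reverse=True)[:TOP_K]
    m = max(logits[e] for e in top)
    exps = [math.exp(logits[e] - m) for e in top]
    total = sum(exps)
    out = [0.0] * D_MODEL
    for e, v in zip(top, exps):
        y = expert_ffn(e, x)  # only the selected experts compute anything
        w = v / total         # gate weight for this expert
        out = [o + w * yi for o, yi in zip(out, y)]
    return out

token = [random.gauss(0, 1) for _ in range(D_MODEL)]
y = moe_layer(token)
print(len(y), sum(calls), sum(1 for c in calls if c))  # 8 2 2
```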

Sparsity: Achieving "Spärlichkeit"

The technical term for this efficiency is Sparsity (or in German, Spärlichkeit).

Here lies the magic trick: the total model can be enormous, with hundreds of billions or even more than a trillion parameters holding a vast depth of knowledge. But for any single token, only a small fraction of those parameters is actually active. DeepSeek-V3, for example, has 671 billion parameters in total but activates only about 37 billion per token.
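The gap between total and active parameters follows from simple arithmetic. The sketch below assumes an idealized model in which some parameters (attention, embeddings) are shared by every token and the rest are split evenly across experts; the function name and the hypothetical configuration are illustrative only. The figures in `published` are the totals and per-token active counts the vendors have published for Mixtral 8x7B and DeepSeek-V3.

```python
def moe_param_counts(shared, per_expert, n_experts, top_k):
    """Idealized count: shared params are always active; only top_k experts run per token."""
    total = shared + n_experts * per_expert
    active = shared + top_k * per_expert
    return total, active

# Hypothetical configuration (in billions of parameters).
total, active = moe_param_counts(shared=2, per_expert=5, n_experts=8, top_k=2)
print(f"hypothetical: {total}B total, {active}B active ({active / total:.0%})")

# Published figures: (total, active per token), in billions.
published = {"Mixtral 8x7B": (46.7, 12.9), "DeepSeek-V3": (671, 37)}
for name, (t, a) in published.items():
    print(f"{name}: {a / t:.1%} of parameters active per token")
```

Adding experts grows `total` linearly while `active` stays fixed by `top_k`, which is why knowledge capacity and per-token cost can be scaled somewhat independently.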

This allows us to:

Scale Knowledge: The model can learn significantly more information because the total parameter count can be huge.

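Reduce Latency: Because only a small slice of the model is activated per token, the computation is much faster than in a dense model of the same total size (although all experts must still be held in memory).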

Lower Costs: Less compute per query means lower energy bills and lower inference costs.
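A common back-of-the-envelope estimate for inference compute is roughly 2 FLOPs (one multiply and one add) per active parameter per generated token. It ignores attention over long contexts and all hardware effects, so treat it as an approximation. Applying it to Mixtral 8x7B's published figures versus a hypothetical dense model of the same total size:

```python
def forward_flops_per_token(active_params):
    """Rule of thumb: ~2 FLOPs per active weight (multiply + accumulate)."""
    return 2 * active_params

dense_flops = forward_flops_per_token(46.7e9)  # hypothetical dense model, 46.7B params
moe_flops = forward_flops_per_token(12.9e9)    # Mixtral 8x7B: 12.9B active per token
ratio = dense_flops / moe_flops
print(f"dense: {dense_flops:.2e}, MoE: {moe_flops:.2e} FLOPs/token, ~{ratio:.1f}x less compute")
```

Compute is only one part of the bill: memory bandwidth and hardware utilization also shape real-world latency and cost, so the actual speedup can be smaller than this ratio.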

Why This Matters for IT Decision Makers

As a CISO or CEO, you might be wondering: "Why should I care about the underlying architecture?"

Because architecture dictates feasibility and sovereignty.

At SafeChats, we focus heavily on sovereign AI and secure communications. The MoE architecture is a critical enabler for running powerful AI in restricted environments. Because these models are computationally efficient, high-performance AI doesn't necessarily require a hyperscaler data center anymore. It opens the door for running sophisticated models on-premise or in private clouds—ensuring your data never leaves your control.
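For on-premise planning, the flip side of sparsity is memory: although only a few experts run per token, all of them must be loaded. A rough sizing sketch, assuming weights only (no KV cache, activations, or framework overhead) and using Mixtral 8x7B's published 46.7B total parameters as the example; the function name is hypothetical:

```python
def weight_memory_gb(total_params, bytes_per_param):
    """Memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return total_params * bytes_per_param / 1e9

MIXTRAL_TOTAL_PARAMS = 46.7e9
for label, bytes_per_param in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    gb = weight_memory_gb(MIXTRAL_TOTAL_PARAMS, bytes_per_param)
    print(f"{label}: ~{gb:.0f} GB of weights")
```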

The trend is no longer just about being "bigger." It’s about being smarter. Mixture of Experts allows us to have the best of both worlds: the broad knowledge of a giant model with the speed and efficiency of a specialist.

Ready for Sovereign AI?

Efficiency and security go hand in hand. If you are looking to deploy AI communication tools that respect your data sovereignty and utilize the smartest tech available, we should talk.

Test SafeChats today or book a personal demo with me to see how we secure your corporate communication.